The fourth, the fifth, the minor fall and the major lift (Hallelujah, Leonard Cohen)

Next problem is extracted from MacKay’s Information Theory, Inference and Learning Algorithms:

Two people have left traces of their own blood at the scene of a crime. A suspect, Oliver, is tested and found to have type ‘O’ blood. The blood groups of the two traces are found to be of type ‘O’ (a common type in the local population, having frequency 60%) and of type ‘AB’ (a rare type, with frequency 1%). Do these data give evidence in favor of the proposition that Oliver was one of the people who left blood at the scene?

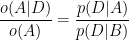

To answer the question, let’s first remember the probability form of Bayes theorem:

where:

- p(H) is the probability of the hypothesis H before we see the data, called the prior

- p(H|D) is the probablity of the hyothesis after we see the data, called the posterior

- p(D|H) is the probability of the data under the hypothesis, called the likelihood

- p(D)is the probability of the data under any hypothesis, called the normalizing constant

If we have two hypothesis, A and B, we can write the ratio of posterior probabilities like this:

If p(A)=1-p(B) (what means that A and B are mutually exclusive and collective exhaustive), then we can rewrite the ratio of the priors and the ratio of the posteriors as odds. Writing o(A) for odds in favor of A, we get the odds form of Bayes theorem:

Dividing through by o(A) we have:

The term on the left is the ratio of the posteriors and prior odds. The term on the right is the likelihood ratio, also called the Bayes factor. If it is greater than 1, that means that the data were more likely under A than under B. And since the odds ratio is also greater than 1, that means that the odds are greater, in light of the data, than they were before. If the Bayes factor is less than 1, that means the data were less likely under A than under B, so th odds in favor of A go down.

Let’s go back to our initial problem. If Oliver left his blood at the crime scene, the probability of the data is just the probability that a random member of the population has type ‘AB’ blood, which is 1%. If Oliver did not leave blood at the scene, what is the the chance of finding two people, one with type ‘O’ and one with type ‘AB’? There are two ways it might happen: the first person we choose might have type ‘O’ and the second ‘AB’, or the other way around. So the probability in this case is 2(0.6)(0.01)=1.2%. Dividing probabilities of both scenarios we obtain a Bayes factor of 0.83, and we conclude that the blood data is evidence against Oliver’s guilt.

Once I read this example, I decided to replicate it using real data of blood type distribution by country from here. After cleaning data, I have this nice data set to work with:

For each country, I get the most common blood type (the one which the suspect has) and the least common and replicate the previous calculations. For example, in the case of Spain, the most common type is ‘O+’ with 36% and the least one is ‘AB-‘ with 0.5%. The Bayes factor is 0.005/(2(0.36)(0.005))=1.39 so data support the hypothesis of guilt in this case. Next chart shows Bayes factor accross countries:

Just some comments:

- Sometimes data consistent with a hypothesis are not necessarily in favor of the hypothesis

- How different is the distribution of blood types between countries!

- If you are a estonian ‘A+’ murderer, choose carefully your accomplice

This is the code of the experiment:

library(rvest)

library(dplyr)

library(stringr)

library(DT)

library(highcharter)

# Webscapring of the table with the distribution of blood types

url <- "http://www.rhesusnegative.net/themission/bloodtypefrequencies/"

blood <- url %>%

read_html() %>%

html_node(xpath='/html/body/center/table') %>%

html_table(fill=TRUE)

# Some data cleansing

blood %>% slice(-c(66:68)) -> blood

blood[,-c(1:2)] %>%

sapply(gsub, pattern=",", replacement=".") %>%

as.data.frame %>%

sapply(gsub, pattern=".79.2", replacement=".79") %>%

as.data.frame-> blood[,-c(1:2)]

blood %>%

sapply(gsub, pattern="%|,", replacement="") %>%

as.data.frame -> blood

blood[,-1] = apply(blood[,-1], 2, function(x) as.numeric(as.character(x)))

blood[,-c(1:2)] %>% mutate_all(funs( . / 100)) -> blood[,-c(1:2)]

# And finally, we have a nice data set

datatable(blood,

rownames = FALSE,

options = list(

searching = FALSE,

pageLength = 10)) %>%

formatPercentage(3:10, 2)

# Calculate the Bayes factor

blood %>%

mutate(factor=apply(blood[,-c(1,2)], 1, function(x) {min(x)/(2*min(x)*max(x))})) %>%

arrange(factor)-> blood

# Data Visualization

highchart() %>%

hc_chart(type = "column") %>%

hc_title(text = "Bayesian Blood") %>%

hc_subtitle(text = "An experiment about the Bayes Factor") %>%

hc_xAxis(categories = blood$Country,

labels = list(rotation=-90, style = list(fontSize = "12px"))) %>%

hc_yAxis(plotBands = list(list(from = 0, to = 1, color = "rgba(255,215,0, 0.8)"))) %>%

hc_add_series(data = blood$factor,

color = "rgba(255, 0, 0, 0.5)",

name = "Bayes Factor")%>%

hc_yAxis(min=0.5) %>%

hc_tooltip(pointFormat = "{point.y:.2f}") %>%

hc_legend(enabled = FALSE) %>%

hc_exporting(enabled = TRUE) %>%

hc_chart(zoomType = "xy")